In a terminal, log in to the container.

```bash
# At terminal
docker exec -ti de-2024 bash
```

1. After logging in to the container, set the path for AIRFLOW_HOME and install Airflow.

```bash
# Set path for AIRFLOW_HOME
export AIRFLOW_HOME=/app/de/airflow
```
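The `export` only lasts for the current shell. A new `docker exec` session starts without it, and Airflow then falls back to its default home, `~/airflow`. A minimal sketch for persisting the setting, assuming the container's shell is bash and reads `~/.bashrc` for interactive sessions. `append_once` is a hypothetical helper, not an Airflow command:

```bash
# Hypothetical helper: append a line to a file only if that exact line is not already present.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (assumption: interactive `docker exec ... bash` sessions read ~/.bashrc):
# append_once 'export AIRFLOW_HOME=/app/de/airflow' ~/.bashrc
```

Running it twice leaves a single line, so it is safe to keep in a setup script.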

```bash
# Install Airflow
pip3 install "apache-airflow[celery]==2.7.3" --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-2.7.3/constraints-3.8.txt"
```
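The constraint file in the URL has to match the container's Python version (the command above assumes Python 3.8), and it only exists for Python versions that Airflow release supports. A sketch that builds the URL with the same pattern, assuming `python3` is the interpreter Airflow is installed into. `airflow_constraint_url` is a hypothetical helper:

```bash
# Hypothetical helper: build the Airflow constraints URL for a given Airflow and Python version,
# following the same pattern as the URL in the install command.
airflow_constraint_url() {
  local airflow_version="$1" python_version="$2"
  printf 'https://raw.githubusercontent.com/apache/airflow/constraints-%s/constraints-%s.txt\n' \
    "$airflow_version" "$python_version"
}

# Usage: detect the interpreter's major.minor version, then install with the matching constraints.
# PYTHON_VERSION="$(python3 -c 'import sys; print(f"{sys.version_info.major}.{sys.version_info.minor}")')"
# pip3 install "apache-airflow[celery]==2.7.3" --constraint "$(airflow_constraint_url 2.7.3 "$PYTHON_VERSION")"
```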

2. Run `airflow db migrate` to generate airflow.cfg, then update some of its settings.

```bash
airflow db migrate
vim "$AIRFLOW_HOME/airflow.cfg"
```

```ini
# Update the DAGs folder (line 7)
dags_folder = /app/de/airflow/dags

# Set the timezone settings (default_timezone and default_ui_timezone) to your timezone (lines 36 and 1214)

# Do not load the example DAGs (line 106)
load_examples = False

# Comment out the existing sql_alchemy_conn and add a new setting for MySQL (line 424)
sql_alchemy_conn = mysql+mysqlconnector://{your_airflow_admin_account}:{your_airflow_admin_password}@airflowdb:3306/airflow_db
```
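If you would rather not edit airflow.cfg by hand in vim, the same changes can be scripted. A minimal sketch, assuming the generated airflow.cfg keeps each setting as an uncommented `key = value` line under its `[section]` header (in Airflow 2.7, `dags_folder` and `load_examples` live in `[core]` and `sql_alchemy_conn` in `[database]`). `set_cfg_value` is a hypothetical helper; unlike the manual step, it replaces the old value in place instead of commenting it out:

```bash
# Hypothetical helper: replace the value of an existing `key = value` line inside [section].
# Lines in other sections, commented lines, and missing keys are left untouched.
set_cfg_value() {
  local file="$1" section="$2" key="$3" value="$4" tmp
  tmp="$(mktemp)"
  # Note: awk -v expands backslash escapes, so keep values free of backslashes.
  awk -v section="[$section]" -v key="$key" -v value="$value" '
    /^\[.*\]$/ { current = $0 }
    current == section && index($0, key " =") == 1 { print key " = " value; next }
    { print }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage:
# cfg="$AIRFLOW_HOME/airflow.cfg"
# set_cfg_value "$cfg" core dags_folder /app/de/airflow/dags
# set_cfg_value "$cfg" core load_examples False
# set_cfg_value "$cfg" database sql_alchemy_conn 'mysql+mysqlconnector://{your_airflow_admin_account}:{your_airflow_admin_password}@airflowdb:3306/airflow_db'
```

Matching on the section header avoids touching a key with the same name in another section.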

3. Open another terminal, log in to the airflowdb container, then create the Airflow database and update the MySQL settings.

```bash
# At a new terminal
docker exec -ti de-2024-afdb bash
```

After logging in to the airflowdb container, start the MySQL client as root:

```bash
mysql -u root -p
```

Then, at the `mysql>` prompt, run:

```sql
CREATE DATABASE airflow_db CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
CREATE USER '{your_airflow_admin_account}' IDENTIFIED BY '{your_airflow_admin_password}';
GRANT ALL PRIVILEGES ON airflow_db.* TO '{your_airflow_admin_account}';
```
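The three statements above can also be run non-interactively with `mysql -e`. A sketch, assuming the database name, account, and password are passed as arguments. `airflow_db_sql` is a hypothetical helper, and it does no escaping, so keep quote characters out of the names and password:

```bash
# Hypothetical helper: print the SQL that creates the Airflow database and its admin account.
# No escaping is done: names and password must not contain quote characters.
airflow_db_sql() {
  local db="$1" user="$2" password="$3"
  printf 'CREATE DATABASE %s CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;\n' "$db"
  printf "CREATE USER '%s' IDENTIFIED BY '%s';\n" "$user" "$password"
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s';\n" "$db" "$user"
}

# Usage inside the airflowdb container (mysql still prompts for the root password):
# mysql -u root -p -e "$(airflow_db_sql airflow_db '{your_airflow_admin_account}' '{your_airflow_admin_password}')"
```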

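Exit the MySQL client (`exit`), then install vim and edit the MySQL configuration:

```bash
apt-get update
apt-get install vim
vi /etc/mysql/my.cnf
```

Add the following under the `[mysqld]` section (existing content elided):

```ini
...
[mysqld]
explicit_defaults_for_timestamp=1
```

Restart MySQL (for example, by restarting the airflowdb container) so that the change takes effect.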
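Instead of editing my.cnf in vi, the setting can be added non-interactively. A sketch, assuming the config path used above (`/etc/mysql/my.cnf`). `ensure_explicit_defaults` is a hypothetical helper:

```bash
# Hypothetical helper: make sure my.cnf sets explicit_defaults_for_timestamp=1 under [mysqld].
# If any explicit_defaults_for_timestamp line already exists, the file is left unchanged.
ensure_explicit_defaults() {
  local cnf="$1" tmp
  if grep -q '^explicit_defaults_for_timestamp' "$cnf"; then
    return 0
  fi
  if grep -q '^\[mysqld\]' "$cnf"; then
    # Insert the setting right after the [mysqld] header line.
    tmp="$(mktemp)"
    awk '{ print } /^\[mysqld\]/ { print "explicit_defaults_for_timestamp=1" }' "$cnf" > "$tmp" \
      && cat "$tmp" > "$cnf"
    rm -f "$tmp"
  else
    # No [mysqld] section yet: append one at the end of the file.
    printf '\n[mysqld]\nexplicit_defaults_for_timestamp=1\n' >> "$cnf"
  fi
}

# Usage:
# ensure_explicit_defaults /etc/mysql/my.cnf
```

After MySQL has been restarted, `mysql -u root -p -e "SELECT @@explicit_defaults_for_timestamp;"` should report 1.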

4. Back in the Ubuntu container, initialize the Airflow database:

```bash
airflow db migrate
```
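Right after a MySQL restart the database may refuse connections for a few seconds, so the first migration attempt can fail. A minimal retry sketch. `retry` is a hypothetical helper, while `airflow db check` is the Airflow 2 CLI command that tests whether the metadata database can be reached:

```bash
# Hypothetical helper: run a command up to ATTEMPTS times, sleeping DELAY seconds between failures.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    # Do not sleep after the last failed attempt.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage: wait up to roughly 30 seconds for MySQL, then initialize the Airflow tables.
# retry 10 3 airflow db check && airflow db migrate
```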

5. Create an Airflow account:

airflow users create --username etl --firstname ETL --lastname ETL --role Admin --email etl@etl.org --password etl # start the web server, default port is 8080

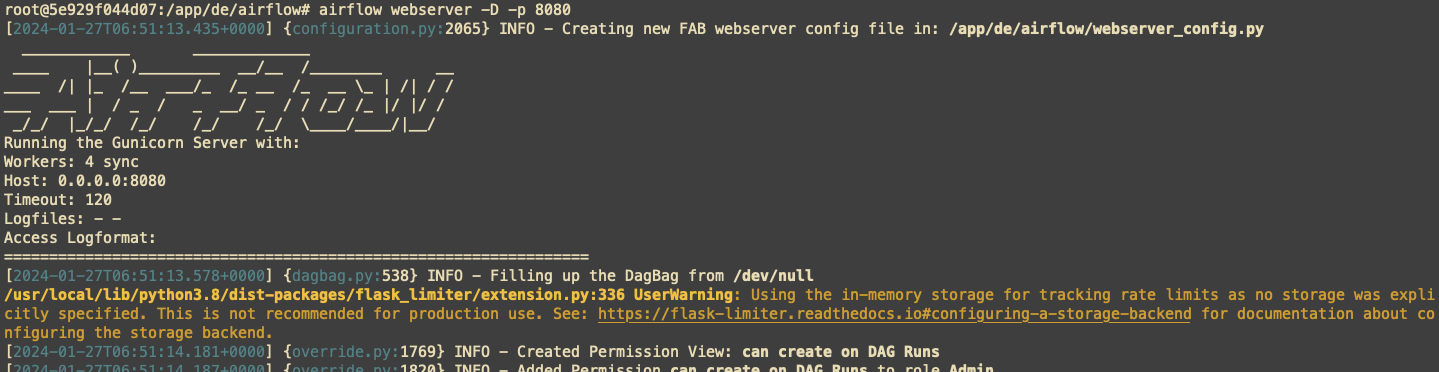

airflow webserver -D -p 8080

# start the scheduler. I recommend opening up a separate terminal #window for this step

airflow scheduler -D

7. Once everything is installed, open http://localhost:8080/home in a browser.
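To check from inside the container that the daemons started with `-D` are still running, you can look at their PID files. A sketch, assuming Airflow's default PID file locations, `$AIRFLOW_HOME/airflow-webserver.pid` and `$AIRFLOW_HOME/airflow-scheduler.pid`. `airflow_daemon_running` is a hypothetical helper:

```bash
# Hypothetical helper: succeed if the PID file for the given Airflow component
# (e.g. "webserver" or "scheduler") exists and names a live process.
airflow_daemon_running() {
  local home="$1" component="$2" pidfile pid
  pidfile="$home/airflow-$component.pid"
  [ -f "$pidfile" ] || return 1
  pid="$(cat "$pidfile")"
  [ -n "$pid" ] || return 1
  # kill -0 sends no signal; it only checks that the process exists and can be signalled.
  kill -0 "$pid" 2>/dev/null
}

# Usage:
# for c in webserver scheduler; do
#   airflow_daemon_running "$AIRFLOW_HOME" "$c" && echo "$c: running" || echo "$c: not running"
# done
```

From the host, `curl http://localhost:8080/health` returns the web server's JSON health report, which includes the metadatabase and scheduler status.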
